Resources

The USC Mark and Mary Stevens Neuroimaging and Informatics Institute is housed in Stevens Hall for Neuroimaging (SHN), a modern, 35,000 gross sq ft building on the Health Sciences Campus designed specifically to accommodate our research. The facility houses a data center, a state-of-the-art theater, offices, and workspaces. The significant campus funding allocated to create the Institute and its facilities provides the opportunity to build broad, rich multidisciplinary expertise. The Keck School of Medicine has dedicated significant campus resources, capital infrastructure and space to LONI (the Laboratory of Neuro Imaging) and the Stevens Neuroimaging and Informatics Institute.

THE INSTITUTE

SHN houses nearly 100 faculty, postdocs, students and staff in seating configurations ranging from private and shared offices to open workspaces designed for collaboration. The people spaces were carefully designed to encourage ongoing discussion and collaboration while providing a peaceful work environment for all.

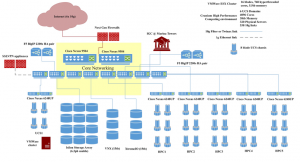

The Institute provides an extensive infrastructure designed and operated to support modern informatics research and hundreds of projects, including several multi-site national and global efforts. Redundancy is built into all equipment, and the facility is fully secured to protect equipment and data. The resources described below provide networking, storage and computational capabilities that ensure a stable, secure and robust environment, and together they form an exceptional test bed for creating and validating big data solutions. Because our systems administration team has designed, built and continuously upgraded these resources over the years, we have the expertise and operating procedures in place to use them to their maximum benefit.

Data Center Infrastructure

Data Center Physical Site Security

Computational and Storage Resources

Rapid advances in imaging and genetics technology now allow researchers to produce very high-resolution, time-varying, multidimensional data sets of the brain. The complexity of these data, however, demands immense computing capability.

The data center's compute infrastructure provides 4,096 cores and 38 terabytes of aggregate memory. This highly available, redundant system is designed for demanding big data applications. Blades in the Cisco UCS environment are easy to replace: a failing blade sends an alert to Cisco, where a replacement ticket is generated automatically. On arrival, a new blade can go from the shipping box to fully provisioned and in production in as little as 5 minutes.

The Cisco UCS blade solution allows the Institute to run the services of a much larger physical infrastructure in a much smaller footprint without sacrificing availability or flexibility. Each Cisco chassis hosts 8 server blades and provides 160 gigabits per second of external bandwidth. Each of the 48 racks can hold up to 6 chassis plus the requisite networking equipment (4 fabric extenders), so the new data center has ample rack space to accommodate this project.
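
The rack and chassis figures above imply a simple capacity ceiling. The sketch below multiplies them out; it assumes every rack is filled to its 6-chassis maximum and every chassis to 8 blades, so it describes the limit of the space rather than what is installed today.

```python
# Capacity ceiling implied by the stated figures (assumes every slot is filled).
RACKS = 48
CHASSIS_PER_RACK = 6
BLADES_PER_CHASSIS = 8
GBPS_PER_CHASSIS = 160  # external bandwidth per chassis, in Gb/s


def rack_capacity(racks: int = RACKS) -> dict:
    """Return the maximum chassis, blade and bandwidth totals for `racks` racks."""
    chassis = racks * CHASSIS_PER_RACK
    return {
        "chassis": chassis,
        "blades": chassis * BLADES_PER_CHASSIS,
        "bandwidth_per_rack_gbps": CHASSIS_PER_RACK * GBPS_PER_CHASSIS,
        "aggregate_bandwidth_gbps": chassis * GBPS_PER_CHASSIS,
    }


print(rack_capacity())
# {'chassis': 288, 'blades': 2304, 'bandwidth_per_rack_gbps': 960, 'aggregate_bandwidth_gbps': 46080}
```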

Institutions and scientists worldwide rely on the Institute’s resources to conduct research. The Stevens Neuroimaging and Informatics Institute uses a fault-tolerant, high-availability systems design to ensure 24/7 operation. The primary storage cluster consists of 51 EMC Isilon nodes providing 5.3 petabytes of usable, highly available, high-performance storage. The Image & Data Archive uses a secondary cluster of 9 Isilon nodes with approximately 1 petabyte of usable capacity. A third, three-node Isilon cluster of approximately 320 terabytes is used to test volatile and functionally aggressive environments. A three-node Cisco UCS C3260 storage cluster provides 1 petabyte of storage and runs an operating system and file system tailored specifically for large informatics workflows.

The Institute recently upgraded its storage and compute resources with a new Dell EMC Unity XT 480F all-flash storage unit and a 52-node Dell EMC Isilon A2000 NAS storage cluster, bringing the data center’s total storage capacity to more than 18 petabytes. Data in these clusters moves exclusively over 10 Gigabit links, except for node-to-node communication within the Isilon clusters, which uses QDR InfiniBand and provides 40 gigabits per second of bidirectional throughput on each of the Isilon clusters’ 126 links. Fault tolerance is as important as speed in the design of this data center: each Isilon storage cluster can gracefully lose multiple nodes simultaneously without noticeably affecting throughput or introducing errors. The virtualization environment uses a 15-terabyte EMC XtremIO all-flash storage array. A 15-terabyte EMC VNX SAN cluster with tiered solid-state storage complements the storage environment, providing additional redundant storage over separate network paths as a further layer of resilience for the data and virtualization infrastructure.

External services are load balanced across four F5 BIG-IP 2200S load balancers, which provide balancing for websites and applications as well as ICSA-certified firewall services. The core network is built entirely on Cisco Nexus hardware; each of the two Cisco Nexus 9504 switches supports 15 terabits per second of throughput. Immediately adjacent to the machine room is a user space with twelve individual stations separated by office partitions. These stations are staffed by personnel who continuously monitor the health of the data center and plan future improvements. Each station is also equipped with a networked workstation for image processing, visualization and statistical analysis.

Network Resources

Service continuity, deterministic performance and security were fundamental objectives in the design of the network infrastructure. The Institute’s intranet uses separate core, distribution and edge layers, with redundant switches in the core and edge for high availability. While ground network connectivity is entirely Gigabit, server data connectivity is nearly all 10 Gigabit over fiber and Twinax, connected to an array of Cisco Nexus 9372 distribution switches, numerous Cisco Nexus 6628 switches, and Cisco Nexus 2248 fabric extenders. For Internet access, the Institute is connected to the vBNS of Internet2 via six Gigabit fiber optic lines over diverse routes, so that external connectivity is maintained if any single path fails.

The facility has two next-generation firewall appliances that provide network security and deep packet inspection. The Stevens Neuroimaging and Informatics Institute has also implemented virtual private network (VPN) services using SSL VPN and IPsec to give authorized users access to internal resources. A VPN connection establishes an encrypted tunnel over the Internet between the client and the VPN gateway, protecting communications in transit.

The Institute has an extensive library of communications software for transmitting data and recording transaction logs. The library includes software for monitoring network processes, automatically warning system operators of potential problems, restarting failed processes, and migrating network services to an available server. For instance, the laboratory has configured multiple web servers with Linux Virtual Server (LVS) software for high-availability web, application and database service provisioning as well as load balancing. LVS currently uses a round-robin scheduling algorithm: incoming requests, whether HTTP, JSP or MySQL, are forwarded in turn to the next available server, spreading the processing load across the pool. Listeners on each LVS node monitor the status and responsiveness of the others; if a failure is detected, an available server is elected master and assumes control of request forwarding for the entire LVS environment.
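
The round-robin dispatch and failover behavior can be modeled in a few lines. In LVS itself, scheduling happens in the kernel's IPVS layer (configured with tools such as `ipvsadm`), and health checking and director failover are usually handled by companion daemons such as keepalived, which elects a master through VRRP priorities. The Python below is only a behavioral sketch: the server names are hypothetical, and the lowest-name election rule is a deliberately simple stand-in for a real priority scheme.

```python
class RoundRobinBalancer:
    """Hypothetical model of round-robin dispatch that skips servers marked down."""

    def __init__(self, servers):
        self.servers = list(servers)
        self.healthy = set(self.servers)
        self._next = 0  # index where the next search for a server starts

    def mark_down(self, server):
        self.healthy.discard(server)

    def mark_up(self, server):
        if server in self.servers:
            self.healthy.add(server)

    def pick(self):
        """Return the next healthy server in rotation order."""
        n = len(self.servers)
        for offset in range(n):
            idx = (self._next + offset) % n
            if self.servers[idx] in self.healthy:
                self._next = (idx + 1) % n
                return self.servers[idx]
        raise RuntimeError("no healthy servers available")


def elect_master(nodes, alive):
    """Elect a master among live nodes; lowest name wins (stand-in for real priorities)."""
    candidates = sorted(node for node in nodes if node in alive)
    if not candidates:
        raise RuntimeError("no live nodes to elect")
    return candidates[0]


lb = RoundRobinBalancer(["web1", "web2", "web3"])
print([lb.pick() for _ in range(4)])  # ['web1', 'web2', 'web3', 'web1']
lb.mark_down("web2")                  # e.g. a health check failed
print([lb.pick() for _ in range(3)])  # ['web3', 'web1', 'web3']
print(elect_master(["lvs-a", "lvs-b"], alive={"lvs-b"}))  # lvs-b
```

Skipping a failed server rather than stopping the rotation keeps the remaining servers evenly loaded while the failed one is out of service.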

To further strengthen security in the Image and Data Archive (IDA) ecosystem, IDA resources reside on a separate network segment that isolates them from the broader INI network. This isolation both simplifies access control management and makes access control more effective.
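
Because the IDA sits on its own segment, access to it can be expressed as one short allowlist at the segment boundary. The sketch uses Python's standard `ipaddress` module; the subnets and the default-deny rule are hypothetical and stand in for whatever firewall policy is actually enforced.

```python
import ipaddress

# Hypothetical address plan; these private ranges are illustrative only.
IDA_SEGMENT = ipaddress.ip_network("10.20.0.0/24")
ALLOWED_SOURCES = [
    IDA_SEGMENT,                           # hosts inside the IDA segment itself
    ipaddress.ip_network("10.99.1.0/28"),  # e.g. a small administrative subnet
]


def allowed_into_ida(src: str) -> bool:
    """Default deny: only sources inside an explicitly listed subnet may reach the IDA."""
    addr = ipaddress.ip_address(src)
    return any(addr in net for net in ALLOWED_SOURCES)


for src in ["10.20.0.15", "10.99.1.5", "10.99.1.20", "10.50.3.7"]:
    print(src, allowed_into_ida(src))
# 10.20.0.15 True
# 10.99.1.5 True
# 10.99.1.20 False   (outside the /28, which ends at .15)
# 10.50.3.7 False
```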

Offsite and Onsite Backup Resources

Virtualized Resources

Because new servers must be provisioned quickly for scientific research, the Institute operates a sophisticated high-availability virtualized environment. This environment allows systems administrators to deploy new compute resources (virtual machines, or VMs) in minutes rather than hours or days. Once deployed, these VMs can move freely among all the physical servers in the cluster. The cluster can therefore balance VMs intelligently across the physical servers and fail resources over if a VM becomes I/O-starved or a physical server becomes unavailable. The net benefit for the Institute is that more software resources run efficiently on a smaller hardware footprint, saving on hardware purchases, rack space and cooling.
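
The balancing and failover idea can be illustrated with a toy model. In vSphere these jobs belong to DRS (Distributed Resource Scheduler) and vSphere HA, which use their own, more sophisticated logic; the greedy least-loaded rule, the function names and the abstract "demand" units below are hypothetical simplifications, not VMware's algorithm.

```python
def _least_loaded(hosts, load):
    """Pick the host with the smallest current load (ties broken by name)."""
    return min(hosts, key=lambda h: (load[h], h))


def place_vms(demands, hosts):
    """Greedy placement: largest VMs first, each onto the currently least-loaded host."""
    load = {h: 0 for h in hosts}
    placement = {}
    for vm in sorted(demands, key=lambda v: (-demands[v], v)):
        host = _least_loaded(hosts, load)
        placement[vm] = host
        load[host] += demands[vm]
    return placement


def fail_over(demands, placement, failed_host, hosts):
    """Move only the VMs of `failed_host` onto surviving hosts; others stay put."""
    survivors = [h for h in hosts if h != failed_host]
    load = {h: 0 for h in survivors}
    for vm, host in placement.items():
        if host != failed_host:
            load[host] += demands[vm]
    new_placement = dict(placement)
    orphans = [vm for vm, host in placement.items() if host == failed_host]
    for vm in sorted(orphans, key=lambda v: (-demands[v], v)):
        host = _least_loaded(survivors, load)
        new_placement[vm] = host
        load[host] += demands[vm]
    return new_placement


hosts = ["h1", "h2", "h3"]
demands = {"a": 4, "b": 3, "c": 2, "d": 1}
placement = place_vms(demands, hosts)
print(placement)                                   # {'a': 'h1', 'b': 'h2', 'c': 'h3', 'd': 'h3'}
print(fail_over(demands, placement, "h2", hosts))  # {'a': 'h1', 'b': 'h3', 'c': 'h3', 'd': 'h3'}
```

Re-placing only the displaced VMs mirrors the point of failover: workloads on healthy hosts are left undisturbed.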

The virtualized environment runs VMware ESXi 6 on eight Cisco UCS B200 M3 servers and eight Cisco UCS B200 M4 servers. Each B200 M3 server has sixteen 2.6/3.3 GHz CPU cores and 128 GB of DDR3 RAM; each B200 M4 server has 64 2.3/3.6 GHz CPU cores and 512 GB of DDR4 RAM. These sixteen servers reside in several Cisco UCS 5108 blade chassis with dual 8x 10 Gigabit mezzanine cards, providing a total of 160 gigabits per second of external bandwidth per chassis. Storage for the virtualization cluster is housed on the Isilon clusters and the XtremIO and VNX storage arrays described above. Disk I/O is the primary bottleneck for most virtualization solutions, and these storage arrays comfortably meet the demands of a highly available virtualized infrastructure whose capabilities and efficiency match or exceed those of a physical one. Paired with appropriate storage such as the Isilon cluster, a single six-rack-unit (6RU) chassis holding eight blades can replicate the resources of a physical infrastructure of more than 600 servers.
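
The per-blade figures add up to the virtualization cluster's aggregate capacity. This worked arithmetic takes the stated core counts and memory sizes at face value, and reads "dual 8x 10 Gigabit" as two cards with eight 10 Gb/s ports each, which is consistent with the stated 160 Gb/s per chassis.

```python
# Per-model blade figures as stated: (blade count, cores per blade, RAM in GB per blade)
BLADES = {
    "B200 M3": (8, 16, 128),
    "B200 M4": (8, 64, 512),
}


def cluster_totals(blades=BLADES):
    """Return (total cores, total RAM in GB) across all blade models."""
    cores = sum(count * per_blade for count, per_blade, _ in blades.values())
    ram_gb = sum(count * ram for count, _, ram in blades.values())
    return cores, ram_gb


# Chassis external bandwidth: two cards x eight ports x 10 Gb/s each
CHASSIS_GBPS = 2 * 8 * 10

cores, ram_gb = cluster_totals()
print(f"{cores} cores, {ram_gb} GB RAM, {CHASSIS_GBPS} Gb/s per chassis")
# 640 cores, 5120 GB RAM, 160 Gb/s per chassis
```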

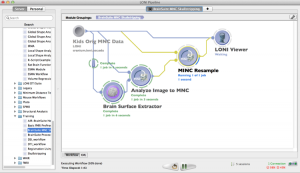

Workflow Processing

Batch-queuing systems such as SGE (https://arc.liv.ac.uk/trac/SGE) can be used to present the resources above as a single compute service for submitting and executing jobs. A grid layer sits atop the compute resources and dispatches jobs to available resources according to user-defined criteria such as CPU type, processor count and memory requirements. The laboratory has integrated the latest version of the LONI Pipeline (http://pipeline.loni.usc.edu) with SGE using the DRMAA and JGDI interface bindings, which allow jobs to be submitted natively from the LONI Pipeline to the grid without external scripts. The LONI Pipeline can also control the grid directly through these interfaces, which makes the operating environment more versatile and effective and improves the overall end-user experience.
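
The matching step performed by the grid layer can be sketched as a filter over nodes followed by a placement choice. In SGE, users express such requirements at submission time (for example with `qsub -l` resource requests), and the scheduler applies its own configurable policy. The Python model below, including the `Node` and `JobRequest` types and the most-free-slots placement rule, is hypothetical and is not SGE's or the LONI Pipeline's code.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    cpu_type: str
    free_slots: int
    free_mem_gb: int


@dataclass
class JobRequest:
    cpu_type: Optional[str]  # None means any CPU type is acceptable
    slots: int
    mem_gb: int


def eligible(job: JobRequest, node: Node) -> bool:
    """True if the node satisfies every user-defined criterion of the job."""
    return ((job.cpu_type is None or node.cpu_type == job.cpu_type)
            and node.free_slots >= job.slots
            and node.free_mem_gb >= job.mem_gb)


def dispatch(job: JobRequest, nodes: List[Node]) -> Optional[str]:
    """Place the job on the eligible node with the most free slots; None means it stays queued."""
    candidates = [n for n in nodes if eligible(job, n)]
    if not candidates:
        return None
    best = min(candidates, key=lambda n: (-n.free_slots, n.name))
    best.free_slots -= job.slots  # reserve the requested resources
    best.free_mem_gb -= job.mem_gb
    return best.name


nodes = [Node("n1", "xeon", 4, 32), Node("n2", "xeon", 8, 64), Node("n3", "epyc", 16, 128)]
print(dispatch(JobRequest("xeon", 4, 16), nodes))  # n2
print(dispatch(JobRequest(None, 8, 64), nodes))    # n3
print(dispatch(JobRequest("xeon", 8, 8), nodes))   # None (waits in the queue)
```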

Visualization Resources

The Scientific Visualization Group is a team of scientists, artists, designers and programmers who present information in an accurate, intuitive and graphically innovative manner. The group translates the results of analyses conducted by LONIR and our collaborators into sophisticated scientific visualizations and animations. By communicating our research in a visually compelling way, the group is a crucial component of LONIR. Visit our gallery to view a showcase of work from the Scientific Visualization Group.

Stevens Hall for Neuroimaging also houses a 50-seat high-definition theater, the Data Immersive Visualization Environment (DIVE). Its centerpiece is a large curved display that can present highly detailed images, video, interactive graphics and rich media generated from research data. The display offers a large image area with consistent brightness across its entire surface, high contrast, and a 150° horizontal viewing angle. The target display resolution is 4K Ultra HD, 3840×2160 (8.3 megapixels), in a 16:9 aspect ratio. The DIVE is designed to support research communication, dissemination, training and highly interactive sessions.

Siemens Prisma 3T and Terra 7T MRI Scanners

The Magnetom Prisma is a high-performance 3T MRI scanner based on the Trio system. It supports a simultaneous gradient strength of 80 mT/m and slew rate of 200 T/m/s. Its 60 cm bore provides magnet homogeneity of 0.2 ppm over a 40 cm diameter spherical volume (DSV). With 64 to 128 receive channels, it is exceptionally well suited to neuroimaging, and its high-performance gradients enable human connectomics research. The Prisma offers Diffusion Spectrum Imaging with up to 514 diffusion directions as standard, allowing characterization of crossing fibers. ZOOMit, its reduced field-of-view technique, enables higher-resolution DTI, including isotropic DTI. Its higher SNR also allows reproducible 3D ASL perfusion imaging and improved resting-state fMRI data.

The Magnetom Prisma is also FDA-cleared for clinical imaging, which allows data to be acquired from patients with neurological diseases, including but not limited to Alzheimer’s disease, Parkinson’s disease, multiple sclerosis, stroke and brain tumors. The scanner’s defining feature is its XR gradient system, which combines a gradient strength of 80 millitesla per meter (mT/m) with a slew rate of 200 tesla per meter per second (T/m/s). It also employs advanced homogeneity and shimming functions for optimal MRI data acquisition, which will be critical for reproducibility in longitudinal studies that follow patients and subjects over time, such as those conducted by the USC ADRC and ADNI.
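
The two XR gradient figures combine into a useful derived quantity: the minimum time to ramp from zero to full gradient amplitude at the maximum slew rate. This is a worked calculation from the stated specifications only; actual ramp times in a given sequence also depend on protocol settings and peripheral nerve stimulation limits.

```latex
t_{\text{rise}} = \frac{G_{\max}}{SR}
  = \frac{80 \times 10^{-3}\ \mathrm{T/m}}{200\ \mathrm{T/(m \cdot s)}}
  = 4 \times 10^{-4}\ \mathrm{s}
  = 0.4\ \mathrm{ms}
```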

The Human Connectome Project is already developing methods for improved mapping of the connectome, such as multi-shell HARDI reconstructed with fiber orientation density (FOD) methods. These methods will benefit from the gradient performance of the 3T Prisma.

The magnet system of the USC Siemens Terra 7T MRI generates the ultrahigh magnetic field, and the electrical and mechanical shims are integrated into the gradient coil. The magnet assembly comprises the superconducting magnet together with the systems for cooling (helium fill/refill interface, cold head), energizing (current probe) and monitoring the magnet during operation, as well as the cabling up to the point where the external lines are connected. The system consists of an actively shielded, highly homogeneous 7.0 tesla superconducting magnet with an 830 mm horizontal room-temperature bore, a low-loss helium cryostat and zero helium boil-off technology. Field shimming is accomplished primarily with superconducting shim coils, and final shimming is performed with a small number of passive shims. The system is supplied with a helium level monitor and an emergency quench heater control unit. A two-stage 4.2 K cryorefrigerator cooling system, consisting of two independent cryocoolers, eliminates static cryogen consumption.

Gradients & RF Receiver Technology. The gradient system consists essentially of a Gradient Power Amplifier (GPA) and a Gradient Coil (GC), both water-cooled to sustain a high duty cycle. The Magnetom 7T uses the same GPA model as other Magnetom systems, and its XR gradients are the same as the Prisma’s, capable of 80 mT/m at 200 T/m/s. The gradient coil includes the full set of five second-order electrical shim coils to adjust B0 homogeneity for each patient and measurement volume. The Siemens RF receiver system has 64 receive channels, and the USC 7T includes a Nova Medical 32-channel head coil. The system also has an 8-channel parallel RF transmit array (pTx). A third-order shim option provides four of the seven possible third-order shims built into the SC72 gradients, along with a second 4-fold SPS cabinet, wiring for the third-order shims, an expanded electrical infrastructure, and a software interface to drive the additional third-order shims.

The Center for Image Acquisition (CIA) is also equipped with a variety of patient monitoring and stimulus equipment. Physiological monitoring is performed with either a BIOPAC MP150 system or a Philips Invivo Expression patient monitor (MR400). Stimulus equipment includes a Cambridge Research Systems BOLDscreen LCD display for fMRI and a Current Designs 4-button response pad. Contrast agent is delivered via a Medrad Spectris Solaris EP injector.

Additional Office Spaces

Resource Capabilities

LONIR develops computational tools for neuroimaging and brain mapping and disseminates them to the community. The resource also provides state-of-the-art grid computing, data storage and archiving to all of our collaborators.

Details on our atlases and software are available on the corresponding LONI web pages. The Image and Data Archive and the Center for Image Acquisition are described below.

LONI IMAGE AND DATA ARCHIVE

The LONI Image & Data Archive (IDA) is a secure system for archiving neuroimaging data that protects subject confidentiality by restricting access to authorized users. IDA provides an interactive, secure environment for storing, searching, updating, sorting, accessing, tracking and manipulating neuroimaging and relevant clinical data. Archiving data is simple and secure and requires only an Internet connection. The archive offers flexibility in defining metadata and can accommodate one or more research groups, species or sites.

IDA combines secure data transmission and storage, neuroimaging data archiving, de-identification and download functionality in a single, straightforward tool. The integrated de-identification engine de-identifies all major medical image file formats (DICOM, GE, NIfTI, MINC, HRRT, ECAT), and the integrated translation engine lets users download images in the file format of their choice. The system rests on a fault-tolerant, redundant architecture, and all data are backed up daily.
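
The IDA's own de-identification engine is not described here; the sketch below only illustrates the basic idea on a DICOM-style header modeled as a plain Python dictionary keyed by DICOM attribute keywords. The attribute list is a small illustrative subset (the confidentiality profiles in DICOM PS3.15 define a far longer list), and `subject_code` is a hypothetical study identifier. A real implementation would also need to handle private tags, dates and burned-in pixel annotations.

```python
# Illustrative subset of identifying DICOM attributes (standard DICOM keywords).
IDENTIFYING_KEYWORDS = {
    "PatientName", "PatientID", "PatientBirthDate", "PatientAddress",
    "ReferringPhysicianName", "InstitutionName", "AccessionNumber",
}


def deidentify(header: dict, subject_code: str) -> dict:
    """Return a copy of `header` with identifying fields removed and a study code as the ID."""
    clean = {k: v for k, v in header.items() if k not in IDENTIFYING_KEYWORDS}
    clean["PatientID"] = subject_code          # pseudonymous code assigned by the study
    clean["PatientIdentityRemoved"] = "YES"    # flag that de-identification was applied
    return clean


hdr = {"PatientName": "DOE^JANE", "PatientID": "MRN123",
       "PatientBirthDate": "19600101", "Modality": "MR"}
print(deidentify(hdr, "SUBJ-001"))
# {'Modality': 'MR', 'PatientID': 'SUBJ-001', 'PatientIdentityRemoved': 'YES'}
```

Returning a copy rather than editing in place leaves the original header untouched, which makes it easy to audit what was removed.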

IDA serves small collaborations as well as large multi-site studies. More than 50,000 images have been archived in IDA by investigators from scores of institutions worldwide. Access to IDA requires registration.

CENTER FOR IMAGE ACQUISITION

The Center for Image Acquisition (CIA) combines two of the world’s most advanced MRI scanners with dedicated supercomputing systems, cutting-edge analysis techniques, and industry-leading expertise to image, map and model the structure and function of the living human brain with unprecedented detail.